Artificial intelligence has been in the news lately, especially generative AI. It seems like every industry is trying to put this technology into their products, including image-processing apps. Michael Rothschild takes a closer look and gives examples in underwater photography.

The idea of generative AI (GAI) is that a system can produce text, images or other media in response to original content and/or prompts (user-supplied instructions to modify the process). Public versions such as ChatGPT or DALL-E are available for online use by anyone. By working with a massive database, parsing the meaning of the prompts, and using a predictive model to figure out what word or other data element is most likely to follow the last one, GAI systems produce impressive results.

There are serious legal and ethical concerns about this. While text GAI can generate flawless output from a purely linguistic point of view, the underlying meaning can be completely wrong or outdated. Furthermore, these systems typically work by harvesting online content, often without the consent of the original creators. These issues are beyond the scope of this article, and fortunately less applicable to the processes described below.

Postproduction

I am an underwater photographer. And while my work is dependent on my diving skills and ability to produce well-lit and composed images in the camera, a huge part of the process has always been postproduction. More than most topside photographers, I spend hours dealing with the image degradation that comes from shooting through a dense medium that absorbs light far more than air, differentially for various points on the visible light spectrum, and with reflective particulate matter suspended in it. I consider that to be part of the art.

So, when Adobe released their beta version of Photoshop that contained a “generative fill” command, I figured I would see what this could do with underwater images. I was very impressed.

Backgrounds

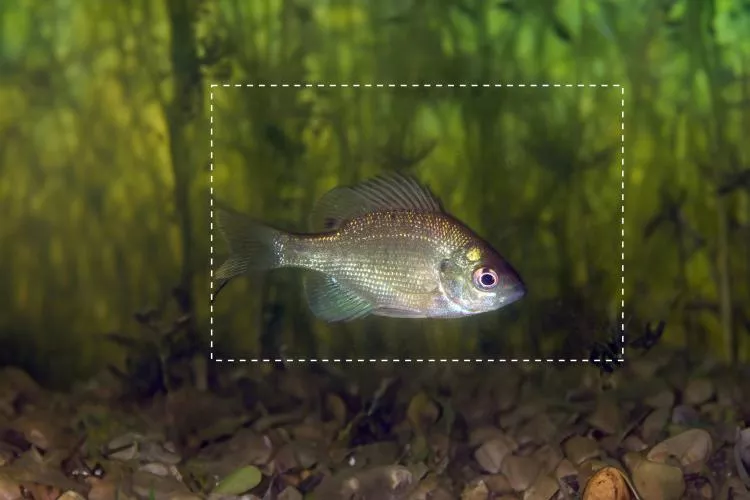

One task that I had often done manually was adding extra background. This is necessary when you rotate a photo slightly for the sake of composition but do not want to crop it further, because that would leave the subject too constrained or cut off. It was easy for small areas with bland backgrounds. If there was a lot of detail in the background, using something like the clone tool made the image look obviously “faked,” so I worked out other approaches. But these only worked for very small areas around the margins. What if we wanted to dramatically increase the negative space? This seemed like a job for GAI, where the computer actually produces high-resolution image detail based on the original image alone or alongside user-supplied prompts.

Topside. I first tried this without prompts on some topside photos. What you do here is take an image, enlarge the canvas, then select this empty space and a small amount of the original image and apply the generative fill tool. A quicker way of doing this is to use the generative expand tool, which is now part of the crop tool dialogue box—you are essentially doing a reverse crop. You will get three versions to choose from each time you click. The image now fills the entire new canvas, based on what the computer finds at the margins of the selected space.
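For readers who like to see the geometry spelled out, here is a minimal sketch of that "reverse crop" selection. It is not Photoshop's scripting interface; the names `plan_expand`, `selection_mask` and the `overlap` parameter are hypothetical, chosen only to illustrate the idea of selecting the empty canvas plus a thin strip of the original image.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExpandPlan:
    canvas_size: tuple    # (width, height) of the enlarged canvas
    paste_offset: tuple   # where the original's top-left corner lands
    keep_box: tuple       # (left, top, right, bottom) of protected pixels


def plan_expand(width, height, pad_left, pad_top, pad_right, pad_bottom, overlap):
    """Plan an enlarged canvas and the region of the original left untouched.

    `overlap` is an assumed number of original pixels, on each extended
    side, that are included in the selection so the fill can blend in.
    """
    if min(width, height) <= 2 * overlap:
        raise ValueError("overlap is too large for this image")
    canvas = (width + pad_left + pad_right, height + pad_top + pad_bottom)
    offset = (pad_left, pad_top)
    # Shrink the protected box inward only on sides that are being extended.
    left = pad_left + (overlap if pad_left else 0)
    top = pad_top + (overlap if pad_top else 0)
    right = pad_left + width - (overlap if pad_right else 0)
    bottom = pad_top + height - (overlap if pad_bottom else 0)
    return ExpandPlan(canvas, offset, (left, top, right, bottom))


def selection_mask(plan):
    """Return rows of booleans: True = pixel to generate, False = keep."""
    canvas_w, canvas_h = plan.canvas_size
    left, top, right, bottom = plan.keep_box
    return [
        [not (left <= x < right and top <= y < bottom) for x in range(canvas_w)]
        for y in range(canvas_h)
    ]


# Extend a tiny 6 x 4 "image" by 3 pixels on the left, with a 1-pixel overlap.
plan = plan_expand(6, 4, pad_left=3, pad_top=0, pad_right=0, pad_bottom=0, overlap=1)
mask = selection_mask(plan)
```

In this toy case the selection covers the three new columns plus one column of the original, which mirrors selecting the empty space and "a small amount of the original image" by hand.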

Image 1 is a topside photo that I loved, but it felt cramped, especially with the hand on the left side of the shot extending right to the border. I had tried expanding the background manually, but it took a lot of work and still did not look great. This was a perfect job for generative fill (Image 2).

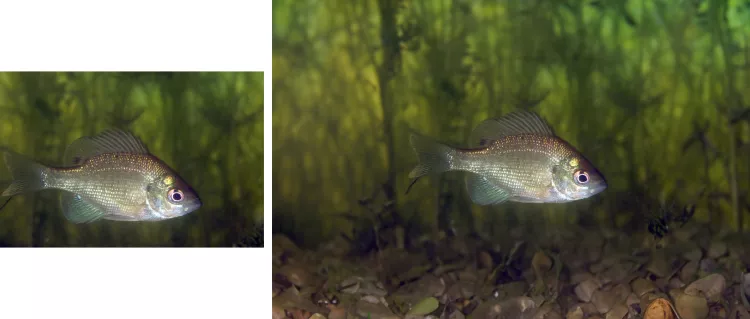

Underwater. For underwater photos, I was able to do a similar manipulation, and I found an additional benefit. If your subject has a little negative space around it, and you select only background pixels of the original image, then you get the effect of significantly increased water clarity (visibility). The reason is that you now have a high-resolution image with more background and a relatively smaller subject, yet the original subject retains its resolution, detail and contrast.

If you had just backed up from the subject during the dive to produce an image with the same ratio of subject size to canvas size, you would be adding a lot more water between the subject and the lens. Unless you were in crystal-clear water with even ambient lighting (something that virtually never happens), the subject would be dimmer, lower in contrast and less detailed because of the water column between the lens and the subject. With GAI, since you have retained the contrast and clarity of the subject, your brain interprets the image as being in much clearer water (Images 3-4 above, and Images 5-6 below).
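The effect of that extra water can be put into rough numbers. A common simplification, which I am assuming here rather than measuring, is that the apparent contrast of a subject falls off exponentially with the length of the water path. The attenuation coefficient below is an illustrative value only; real water varies widely.

```python
import math


def apparent_contrast(inherent_contrast, attenuation_per_m, distance_m):
    """Exponential falloff of contrast along a water path.

    Assumes a simple exponential model; `attenuation_per_m` is a
    hypothetical coefficient, not a measured one.
    """
    return inherent_contrast * math.exp(-attenuation_per_m * distance_m)


ATTENUATION = 0.3  # per metre, illustrative only

close_up = apparent_contrast(1.0, ATTENUATION, distance_m=1.0)
backed_off = apparent_contrast(1.0, ATTENUATION, distance_m=3.0)

print(f"contrast at 1 m: {close_up:.2f}")
print(f"contrast at 3 m: {backed_off:.2f}")
print(f"fraction kept after backing off: {backed_off / close_up:.2f}")
```

Under these assumptions, backing off to get the wider framing costs a large share of the subject's contrast, whereas expanding the canvas afterward keeps the contrast of the close-up shot.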

Horizons

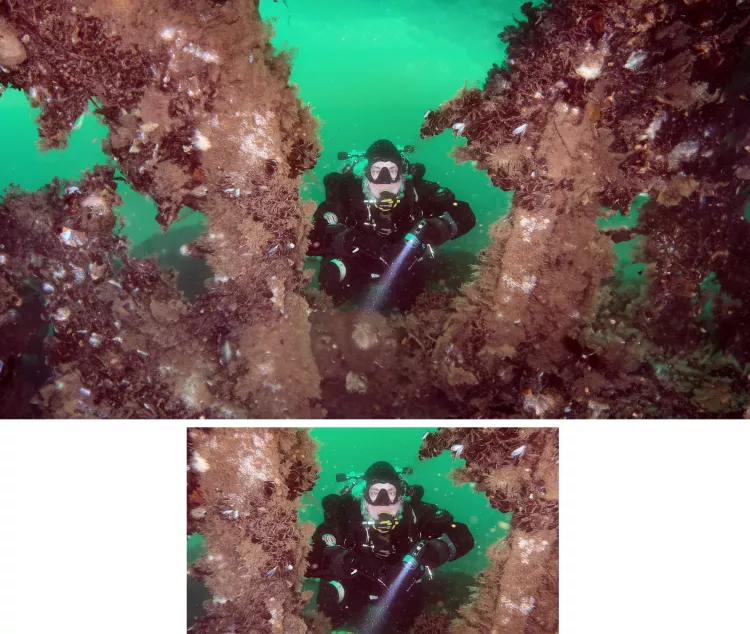

The photos mentioned above show what you get if your subject does not extend to the edges of the original image. If it does, and it is cut at the border, then the system has to try to recreate something more complex than just background. Sometimes this is very accurate, as in this photo of me snorkeling (Images 7 and 8).

See how the system has generated the bottom half of my body underwater. For this one, I did a much larger fill, and the new image included a generated horizon. I did not “ask” for a horizon with a prompt; the system just figured out that a horizon would look right there, based on probability and the underlying massive image database.

I am no longer snorkeling in a lake, a few feet off the dock, but apparently in the middle of the ocean! And, since the system is creating new, generated pixels, the resulting image is huge with a lot of zoomable detail and a sharp subject. This image was downsized for online use, but the large files are great for big prints.

Getting creative

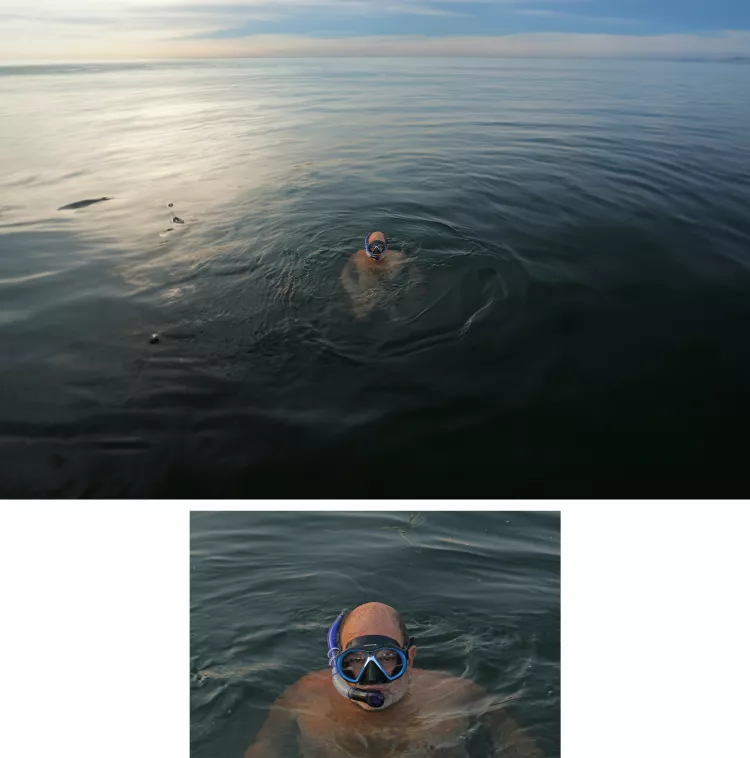

Another possibility arises when there is enough detail in the margins for the system to “get creative.” Instead of just filling in drab negative space to give the impression of distance, it comes up with interesting possible environments. Image 9 is a selfie that I took while snorkeling under a dock in a lake with a lot of bottom grass. Image 10 is what GAI came up with.

Sometimes the system gets this right, and sometimes it makes guesses that are not what we would call accurate. For example, Image 11 is the original tight crop of the face of a fish. My original attempt (with no prompts) resulted in the system guessing “wrong.” Even though a human recognizes this as a fish, the system has no real knowledge—it just makes assumptions based on a massive image database. For this photo, the closest match (used to generate the neck) looked more amphibian (Image 12).

So, I decided to try giving the system a prompt for this photo, and suggested that it create a neck that looked like a snake. I also gave it more space on the right side of the frame, and it came up with an amazing but disturbing image (Image 13).

I am having a lot of fun with this, and these images were the result of just a few days of playing around with generative fill. I am continuing to work on this and figure out various ways of tweaking the output to get better results. These features are now out of beta testing, so if you have the current version of Adobe Photoshop, they are available for use, along with some helpful tutorials.

Is it cheating?

One final thing. I want to address a common question that I get when I lecture about postproduction work on images. Is this “cheating”? Some photographers feel that any sort of image manipulation is inappropriate, and that the photo is what comes out of the camera. I personally disagree.

We spend huge amounts of money on better and better technology to improve the quality of our images: high-end sensors, fast lenses, powerful strobes, etc. Why is technology used AFTER the shutter is pressed any different in principle? I am just trying to make the best images that I can, so how is cleaning up a shot by masking out a bit of backscatter off limits, while angling my strobes out to do the same thing is fair play?

The one exception I make is if the post-processing is done to deceive. For example, if someone is trying to sell a dive trip and they put a whale shark in the background of a reef shot—that is not right. Other than that, my work is not done until I publish the photo.

Give this a try. You will be surprised how many of your “meh” images you can salvage with generative fill! ■